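My classmate needs to build the following:

Say you type AA BB CC,

with the text cursor right after CC.

Pressing Ctrl+A shows a DataGridView next to the text cursor.

The DataGridView lists the values in the database that start with CC (say, CCDD).

Selecting CCDD

then replaces CC,

so the text becomes AA BB CCDD.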

@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@@

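The part I helped with doesn't include the database side.

So it comes down to this:

Say you type AA BB CC,

with the text cursor right after CC.

Pressing Ctrl+A shows a DataGridView next to the text cursor.

From there, there are two approaches.

1.

My classmate's version uses replacement.

Clicking the DataGridView replaces CC with the string I want (say, CCDD).

The small problem is what happens when CC also appears earlier,

as in AA BB CC AA CC.

I only want to replace the last CC, but the earlier one gets replaced too,

giving AA BB CCDD AA CCDD.

2.

My version is wired to a Button

and calls 刪除字(空白後()); 插入字串();

It can also replace CC with the string I want.

The small problem here is that a line break doesn't count as a space,

so the last word on the previous line gets deleted along with it.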
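Both problems above come from not anchoring the edit to the caret. As a rough sketch (not the code either of us actually used), the helper below replaces only the word that ends at the caret and treats line breaks as separators too. `CaretReplace` and `ReplaceWordBeforeCaret` are hypothetical names made up for this illustration; it works on plain strings, so it can be tried without a form.

```csharp
using System;

static class CaretReplace
{
    // Hypothetical helper (not part of the original code): replaces the word
    // that ends at `caret` with `replacement` and returns the new text.
    public static string ReplaceWordBeforeCaret(string text, int caret, string replacement, out int newCaret)
    {
        int start = caret;
        // Walk left until whitespace; char.IsWhiteSpace also covers '\r' and '\n',
        // so a word at the start of a line stops at the line break.
        while (start > 0 && !char.IsWhiteSpace(text[start - 1]))
            start--;

        // Only the span [start, caret) is replaced; earlier occurrences are untouched.
        newCaret = start + replacement.Length;
        return text.Substring(0, start) + replacement + text.Substring(caret);
    }
}
```

In a form this might be called with `textBox1.Text` and `textBox1.SelectionStart`, writing the result back to `Text` and then setting `SelectionStart` to the returned caret position.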

------------------------------------------------------------------------------------------------------------------------------------

string cmdText, insertText, txt;
string[] stringArray;
int txt1;
Point p, P;

// Ctrl+A shows the DataGridView next to the text cursor
private void textBox1_KeyDown(object sender, KeyEventArgs e)
{
    if (e.KeyCode == Keys.A && e.Control)
    {
        p = GetCursorPos(this.textBox1.Handle.ToInt32()); // p.X = line, p.Y = column
        this.label1.Text = string.Format("Line:{0}, Col:{1}", p.X, p.Y);
        // Rough placement: treats the font size as the width and height of one character
        P.X = p.Y * (int)textBox1.Font.Size + textBox1.Location.X;
        P.Y = (p.X + 1) * (int)textBox1.Font.Size + textBox1.Location.Y;
        dataGridView1.Location = P;
        dataGridView1.Show();
        // Split the text before the caret on spaces and line breaks
        stringArray = textBox1.Text.Substring(0, textBox1.SelectionStart).Split(' ', '\r', '\n');
        // The last element is the word right before the caret
        txt = stringArray[stringArray.Length - 1];
    }
}

// Requires: using System.Runtime.InteropServices;
#region Caret line and column
const int EM_GETSEL = 0xB0;
const int EM_LINEFROMCHAR = 0xC9;
const int EM_LINEINDEX = 0xBB;

[DllImport("user32.dll", EntryPoint = "SendMessage")]
public static extern int SendMessage(int hwnd, int wMsg, int wParam, ref int lParam);

public Point GetCursorPos(int TextHwnd)
{
    int lParam = 0, wParam = 0;
    // EM_GETSEL packs the selection start in the low word and the end in the high word
    int i = SendMessage(TextHwnd, EM_GETSEL, wParam, ref lParam);
    int j = i / 65536; // high word: end of the selection, i.e. the caret
    int lineNo = SendMessage(TextHwnd, EM_LINEFROMCHAR, j, ref lParam) + 1;
    // wParam = -1 asks for the index of the first character of the caret's line
    int k = SendMessage(TextHwnd, EM_LINEINDEX, -1, ref lParam);
    int colNo = j - k + 1;
    return new Point(lineNo, colNo); // X = line, Y = column
}
#endregion

private void dataGridView1_CellClick(object sender, DataGridViewCellEventArgs e)
{
    // Insert the selected content at the caret position
    string insertText = "選取內容"; // placeholder for the selected content
    int insertPos = textBox1.SelectionStart;
    // Replace the word at the caret (note: Replace swaps every occurrence of txt)
    textBox1.Text = textBox1.Text.Replace(txt, insertText);
    textBox1.SelectionStart = insertPos + insertText.Length;
    // Hide the DataGridView
    dataGridView1.Visible = false;
}

// Delete the word before the caret, then insert the string I want
private void button1_Click(object sender, EventArgs e)
{
    刪除字(空白後());
    插入字串();
}

// Deletes the CC characters in front of the caret
public void 刪除字(int CC)
{
    int insertPos = textBox1.SelectionStart;
    textBox1.Text = textBox1.Text.Remove(insertPos - CC, CC);
    // Assigning Text moves the caret back to the start, so restore it
    textBox1.SelectionStart = insertPos - CC;
}

// Counts the characters between the caret and the previous space
public int 空白後()
{
    int CC = 0;
    int insertPos = textBox1.SelectionStart - 1;
    char[] c = textBox1.Text.ToCharArray();
    // Also stop at the start of the text so the index never goes negative.
    // Only ' ' counts as a separator here; a line break does not.
    while (insertPos >= 0 && c[insertPos--] != ' ')
    {
        CC++;
    }
    return CC;
}

// Inserts the string at the caret
public void 插入字串()
{
    string insertText = ""; // the string to insert goes here
    int insertPos = textBox1.SelectionStart;
    textBox1.Text = textBox1.Text.Insert(insertPos, insertText);
    textBox1.SelectionStart = insertPos + insertText.Length;
}

Wednesday, August 31, 2016

Reading values from a txt file and drawing the waveform

using System;

using System.Collections.Generic;

using System.ComponentModel;

using System.Data;

using System.Drawing;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

using System.Windows.Forms;

using System.IO;

namespace HRV_波形

{

public partial class Form1 : Form

{

public Form1()

{

InitializeComponent();

}

float ff;
List<int> ii = new List<int>(); // dynamic array of sample values

private void Form1_Load(object sender, EventArgs e)
{
    // === Read the whole file at once ===
    using (StreamReader sr = new StreamReader(@"E:\raw.txt"))
    {
        richTextBox1.Text = sr.ReadToEnd(); // show the raw text in the text box
    }

    // Read again, one line (one value) at a time
    List<string> iii = new List<string>();
    using (StreamReader sr = new StreamReader(@"E:\raw.txt"))
    {
        while (!sr.EndOfStream)
            iii.Add(sr.ReadLine());
    }

    for (int aaa = 0; aaa < iii.Count; aaa++) // convert strings to numbers
    {
        if (!string.IsNullOrEmpty(iii[aaa]))
            ii.Add(int.Parse(iii[aaa]));
    }

    // Set the panel size
    panel2.Width = ii.Count * 2;
    panel2.Height = panel1.Height;
    ff = panel1.Height / 260f; // float division; integer division would truncate
}

private void panel2_Paint(object sender, PaintEventArgs e)
{
    Graphics g = e.Graphics; // draw on the Graphics supplied by the Paint event
    List<Point> MyPoint = new List<Point>();
    for (int b = 0; b < ii.Count; b++)
    {
        // Screen Y grows downward, so subtract to draw larger values higher up
        MyPoint.Add(new Point(b, 250 - ii[b]));
    }
    using (Pen Mypen = new Pen(Color.Blue))
    {
        for (int b = 1; b < MyPoint.Count; b++)
        {
            g.DrawLine(Mypen, MyPoint[b - 1], MyPoint[b]);
        }
    }
}

private void button1_Click(object sender, EventArgs e)
{
    panel2.Invalidate(); // redraw
}

}

}
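The listing computes a scale factor `ff` but then plots with a fixed `250 - c`. A minimal sketch of how the parsing and the vertical scaling could be separated from the form is below; `WaveformSketch`, `ParseSamples` and `ToPixelY` are hypothetical names, and the assumption that samples range from 0 to `maxValue` is mine, not something the original states.

```csharp
using System;
using System.Collections.Generic;

static class WaveformSketch
{
    // Hypothetical helper: parses one integer per line, skipping blank or malformed lines.
    public static List<int> ParseSamples(IEnumerable<string> lines)
    {
        var samples = new List<int>();
        foreach (string line in lines)
        {
            int value;
            if (line != null && int.TryParse(line.Trim(), out value))
                samples.Add(value);
        }
        return samples;
    }

    // Maps a sample to a Y pixel: 0 lands on the bottom edge, maxValue on the top edge.
    // Assumes samples lie in [0, maxValue]; values outside that range fall off the panel.
    public static int ToPixelY(int sample, int panelHeight, int maxValue)
    {
        float scale = (float)panelHeight / maxValue;
        return panelHeight - (int)Math.Round(sample * scale);
    }
}
```

In the Paint handler the point for sample `b` would then be `new Point(b, WaveformSketch.ToPixelY(ii[b], panel2.Height, 260))`, if 260 is indeed the intended full-scale value.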

Packet decoding

The goal is to pull out the data in the green part~~

string s, ss, a;
char[] line;
int[,] ll = new int[64, 32];

private void Form1_Load(object sender, EventArgs e)
{
    // Load the file and read it to the end
    using (System.IO.StreamReader file = new System.IO.StreamReader(@"E:\實習\鈺婷\1.asc", Encoding.ASCII))
    {
        s = file.ReadToEnd();
    }
    textBox1.Text = s;
    line = s.ToCharArray(); // string to char array
    int x = 0;
    // Check character by character for "8="; take the 32 bytes that follow "8=XX"
    for (int i = 0; i < line.Length - 1; i++)
    {
        ss = line[i].ToString() + line[i + 1].ToString();
        if (ss == "8=")
        {
            if (i + 5 + 32 > line.Length) // not enough data left for a full record
                break;
            // Show the 5-character "8=XX" header
            textBox2.Text += new string(line, i, 5);
            // Take the following 32 bytes
            for (int y = 0; y < 32; y++)
            {
                a += line[i + 5 + y].ToString();
                ll[x, y] = line[i + 5 + y];
                textBox2.Text += "," + ll[x, y];
            }
            x += 1;
            textBox2.Text += "\r\n";
            if (x >= 64) // currently 64 records
                break;
        }
    }

}
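The scan above can be pulled out of the form into a plain function, which makes the bounds handling easier to check. This is only a sketch under the same assumptions the listing makes (a two-character `8=` marker, a 5-character header, a 32-byte payload); `PacketSketch` and `ExtractPayloads` are hypothetical names.

```csharp
using System;
using System.Collections.Generic;

static class PacketSketch
{
    // Hypothetical helper: finds each `marker`, skips `headerLength` characters from
    // the start of the marker, and returns the next `payloadLength` characters as ints.
    public static List<int[]> ExtractPayloads(string data, string marker, int headerLength, int payloadLength, int maxRecords)
    {
        var records = new List<int[]>();
        int pos = data.IndexOf(marker, StringComparison.Ordinal);
        while (pos >= 0 && records.Count < maxRecords)
        {
            int start = pos + headerLength;
            if (start + payloadLength > data.Length)
                break; // truncated record at the end of the data

            var payload = new int[payloadLength];
            for (int k = 0; k < payloadLength; k++)
                payload[k] = data[start + k]; // char code, as in the original ll[x, y]
            records.Add(payload);

            // Like the original loop, keep scanning from the next character,
            // so a marker that appears inside a payload is also picked up.
            pos = data.IndexOf(marker, pos + 1, StringComparison.Ordinal);
        }
        return records;
    }
}
```

For the file in the listing the call would be `PacketSketch.ExtractPayloads(s, "8=", 5, 32, 64)`.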


Sending and receiving files over Bluetooth

Code:

using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Data;
using System.Drawing;
using System.Linq;
using System.Text;
using System.Threading.Tasks;
using System.Windows.Forms;
using System.IO;
using System.Net;
using System.Net.Sockets;
using System.Threading;
using InTheHand.Net;
using InTheHand.Net.Bluetooth;
using InTheHand.Net.Sockets;
using InTheHand.Windows.Forms;
using Brecham.Obex;
using Brecham.Obex.Objects;
//using System.Diagnostics;
//using DragAndDropFileControlLibrary;// drag-and-drop control

namespace Ex1

{

public partial class Form1 : Form

{

//BluetoothClient client;
//Stack<ListViewItem[]> previousItemsStack = new Stack<ListViewItem[]>();
//ObexClientSession session;
//string localAds;
//BluetoothAddress mac = null;// address
//BluetoothEndPoint localEndpoint = null;// sending end
//BluetoothClient localClient = null;// receiving end
//BluetoothComponent localComponent = null;

BluetoothRadio radio = null; // Bluetooth adapter
string sendFileName = null; // name of the file to send
BluetoothAddress sendAddress = null; // destination address
ObexListener listener = null; // listener
string recDir = null; // directory for received files
Thread listenThread, sendThread; // send/receive threads

public Form1()
{
    InitializeComponent();
    radio = BluetoothRadio.PrimaryRadio; // get this PC's Bluetooth adapter
}

private void Connect()
{
    // SelectBluetoothDeviceDialog is a form provided by InTheHand.Net.Personal for choosing a Bluetooth device
    using (SelectBluetoothDeviceDialog bldialog = new SelectBluetoothDeviceDialog())
    {
        if (bldialog.ShowDialog() == DialogResult.OK)
        {
            sendAddress = bldialog.SelectedDevice.DeviceAddress; // address of the chosen remote device
            label2.Text = "Address: " + sendAddress.ToString() + " Device name: " + bldialog.SelectedDevice.DeviceName;
        }
    }
}

private void sendFile() // sends the file
{
    ObexWebRequest request = new ObexWebRequest(sendAddress, Path.GetFileName(sendFileName)); // create the request
    WebResponse response = null;
    try
    {
        button發送.Enabled = false;
        request.ReadFile(sendFileName); // load the file to send
        label發送狀態.Text = "Sending...";
        response = request.GetResponse(); // get the response
        label發送狀態.Text = "Sent!";
    }
    catch (System.Exception)
    {
        MessageBox.Show("Send failed!", "Notice", MessageBoxButtons.OK, MessageBoxIcon.Warning);
        label發送狀態.Text = "Send failed!";
    }
    finally
    {
        if (response != null)
        {
            response.Close();
        }
        // Re-enable the button on failure too; otherwise it stays disabled
        button發送.Enabled = true;
    }
}

private void buttonListen_Click(object sender, EventArgs e) // start/stop listening
{
    if (listener == null || !listener.IsListening)
    {
        radio.Mode = RadioMode.Discoverable; // make the local adapter discoverable
        listener = new ObexListener(ObexTransport.Bluetooth); // create the listener
        listener.Start();
        if (listener.IsListening)
        {
            buttonListen.Text = "Stop";
            label1.Text = "Listening started";
            listenThread = new Thread(receiveFile); // start the listening thread
            listenThread.Start();
        }
    }
    else
    {
        listener.Stop();
        buttonListen.Text = "Listen";
        label1.Text = "Listening stopped";
    }
}

private void receiveFile() // receives files
{
    ObexListenerContext context = null;
    ObexListenerRequest request = null;
    while (listener.IsListening)
    {
        context = listener.GetContext(); // get the listener context
        if (context == null)
        {
            break;
        }
        request = context.Request; // get the request
        string uriString = Uri.UnescapeDataString(request.RawUrl); // unescape the URI into a plain string
        string recFileName = recDir + uriString;
        request.WriteFile(recFileName); // write the received file
    }
}

private void button1_Click(object sender, EventArgs e) // connect to a Bluetooth device
{
    Connect();
}

private void button選擇文件_Click(object sender, EventArgs e)
{
    OpenFileDialog opp = new OpenFileDialog();
    if (opp.ShowDialog() == DialogResult.OK)
    {
        sendFileName = opp.FileName;
        label發送文件名.Text = "File: " + sendFileName;
    }
}

private void button發送_Click(object sender, EventArgs e)
{
    sendFile();
}

private void button選擇目錄_Click(object sender, EventArgs e)
{
    FolderBrowserDialog opd = new FolderBrowserDialog();
    opd.Description = "Choose the folder for files received over Bluetooth";
    if (opd.ShowDialog() == DialogResult.OK)
    {
        recDir = opd.SelectedPath;
        label接收目錄.Text = recDir;
    }
}

}

}
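One detail in `receiveFile` is that the save path is built by concatenating `recDir` with the unescaped URL sent by the other device. As a cautious sketch (my own addition, not something the original code does), the path could instead be built from only the final file-name component, so a name containing `..` or extra separators cannot land outside the chosen folder. `ReceivePathSketch` and `BuildReceivePath` are hypothetical names.

```csharp
using System;
using System.IO;

static class ReceivePathSketch
{
    // Hypothetical helper: builds the local path for a received file from the
    // request's raw URL, keeping only the final file-name component.
    public static string BuildReceivePath(string recDir, string rawUrl)
    {
        string unescaped = Uri.UnescapeDataString(rawUrl);
        // Normalise separators so the result does not depend on the platform.
        string name = Path.GetFileName(unescaped.Replace('\\', '/').TrimEnd('/'));
        if (string.IsNullOrEmpty(name) || name == "." || name == "..")
            throw new ArgumentException("rawUrl does not contain a usable file name", "rawUrl");
        return Path.Combine(recDir, name);
    }
}
```

Inside `receiveFile` this would replace the `recDir + uriString` line with `ReceivePathSketch.BuildReceivePath(recDir, request.RawUrl)`.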

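I wrote this as practice back when I was playing with Bluetooth hardware.

Uh, I think this one came from Baidu, haha.

Anyway, programming is basically practice at looking things up.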

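Using the laptop webcam + face detection with Emgu CV

I originally wanted to follow the example a senior student had made.

But her example, whether because of a version problem or something else,

couldn't simply be copied and pasted,

so I also consulted the almighty Google.

I made 7 attempts in total,

two of which failed.

camera01: can capture video

camera02: failed

Using the Emgu package from inside VS didn't work

camera03: captures video, takes snapshots, saves to file

camera04: failed

camera05: finds faces in a photo

camera06: finds faces in a snapshot taken from the video

camera07: finds faces while capturing video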

Important steps:

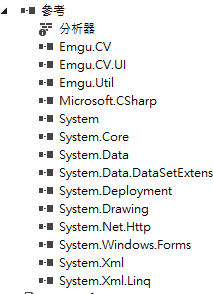

1.使用自己安裝的emgu(emgucv-windows-universal 3.0.0.2157) (之後再試著直接用visualstudio的套件,目前使用套件emguCV是失敗的)

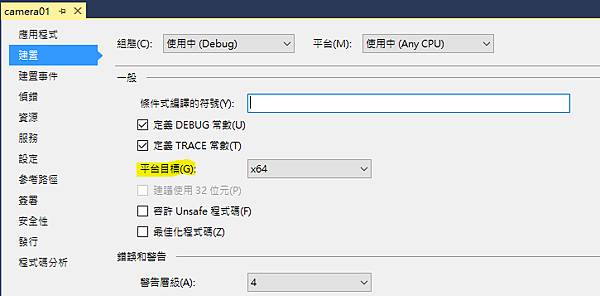

2.更改 專案名稱右鍵->屬性->建置->平台 改為x64

3.增加參考(前三個那個

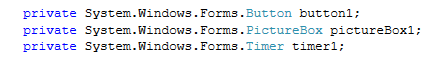

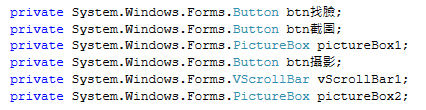

camera01: can capture video

Design view:

Code:

using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Data;
using System.Drawing;
using System.Linq;
using System.Text;
using System.Threading.Tasks;
using System.Windows.Forms;
using System.IO;
using Emgu.CV;
using Emgu.CV.Structure;
using Emgu.Util;

namespace camera01
{
    public partial class Form1 : Form
    {
        public Form1()
        {
            InitializeComponent();
        }

        private Capture _capture;
        private bool _captureInProgress;
        int i = 0;

        private void ProcessFrame(object sender, EventArgs arg)
        {
            Mat frame = _capture.QueryFrame();
            //Image<Bgr, Byte> frame = _capture.QueryFrame();
        }

        private void button1_Click(object sender, EventArgs e)
        {
            #region if capture is not created, create it now
            if (_capture == null)
            {
                try
                {
                    _capture = new Capture();
                }
                catch (NullReferenceException excpt)
                {
                    MessageBox.Show(excpt.Message);
                }
            }
            #endregion

            if (_capture != null)
            {
                if (_captureInProgress)
                {   //stop the capture
                    Application.Idle -= new EventHandler(ProcessFrame);
                    button1.Text = "Start Capture";
                    timer1.Enabled = false;
                }
                else
                {
                    //start the capture
                    button1.Text = "Stop";
                    Application.Idle += new EventHandler(ProcessFrame);
                    timer1.Enabled = true;
                }
                _captureInProgress = !_captureInProgress;
            }
        }

        private void timer1_Tick(object sender, EventArgs e)
        {
            pictureBox1.Image = _capture.QueryFrame().Bitmap;
        }
    }
}

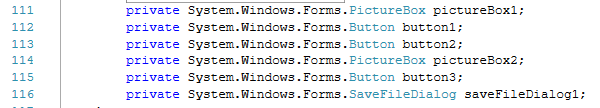

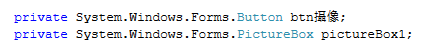

camera03: captures video, takes snapshots, saves to file

Design view:

Code:

using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Data;
using System.Drawing;
using System.Linq;
using System.Text;
using System.Threading.Tasks;
using System.Windows.Forms;
using System.IO;
using Emgu.CV;
using Emgu.CV.Structure;

namespace camera03
{
    public partial class Form1 : Form
    {
        public Capture cap;
        private bool capInProgress;
        int i = 0;

        public Form1()
        {
            InitializeComponent();
        }

        private void Form1_Load(object sender, EventArgs e)
        {
            pictureBox2.Left = pictureBox1.Width + 40;
        }

        private void ProcessFrame(object sender, EventArgs arg)
        {
            pictureBox1.Image = cap.QueryFrame().Bitmap;
        }

        private void button1_Click(object sender, EventArgs e)
        {
            #region if capture is not created, create it now
            if (cap == null)
            {
                try
                {
                    cap = new Capture();
                }
                catch (NullReferenceException excpt)
                {
                    MessageBox.Show(excpt.Message);
                }
            }
            #endregion

            if (cap != null)
            {
                pictureBox1.Size = cap.QueryFrame().Bitmap.Size;
                pictureBox2.Left = pictureBox1.Width + 40;
                if (capInProgress)
                {   //stop the capture
                    Application.Idle -= new EventHandler(ProcessFrame);
                    button1.Text = "Start capture";
                    //timer1.Enabled = false;
                }
                else
                {
                    //start the capture
                    button1.Text = "Stop";
                    Application.Idle += new EventHandler(ProcessFrame);
                    //timer1.Enabled = true;
                }
                capInProgress = !capInProgress;
            }
        }

        // Snapshot: copy the current frame into the second picture box
        private void button2_Click(object sender, EventArgs e)
        {
            pictureBox2.Size = pictureBox1.Size;
            pictureBox2.Image = cap.QueryFrame().Bitmap;
        }

        // Save the current picture as a .bmp named after the current time
        private void button3_Click(object sender, EventArgs e)
        {
            string name = DateTime.Now.ToString("yyyy-MM-dd tt hh-mm-ss");
            try
            {
                //SaveFileDialog saveFileDialog1 = new SaveFileDialog();
                saveFileDialog1.Filter = "Bitmap Image|*.bmp";
                saveFileDialog1.FileName = name;
                saveFileDialog1.Title = "Save image";
                // Only write the file when the dialog was confirmed
                if (saveFileDialog1.ShowDialog() == DialogResult.OK && saveFileDialog1.FileName != "")
                {
                    using (System.IO.FileStream fs = (System.IO.FileStream)saveFileDialog1.OpenFile())
                    {
                        switch (saveFileDialog1.FilterIndex)
                        {
                            case 1:
                                this.pictureBox1.Image.Save(fs,
                                    System.Drawing.Imaging.ImageFormat.Bmp);
                                break;
                        }
                    }
                }
            }
            catch (Exception ex)
            {
                MessageBox.Show(ex.Message);
            }
        }
    }
}

camera05: finds faces in a photo

Design view:

Code:

using System;
using System.Collections.Generic;
using System.ComponentModel;
using System.Data;
using System.Drawing;
using System.Linq;
using System.Text;
using System.Threading.Tasks;
using System.Windows.Forms;
//
using System.Diagnostics;
using System.IO;
using Emgu.CV;
using Emgu.CV.CvEnum;
using Emgu.CV.UI;
using Emgu.CV.Structure;
using Emgu.Util;
using Emgu.CV.ML;
using Emgu.CV.Cuda;

namespace camera05
{
    public partial class Form1 : Form
    {
        public Form1()
        {
            InitializeComponent();
        }

        private void Form1_Load(object sender, EventArgs e)
        {
            //pictureBox1.Size = pictureBox1.Image.Size;
        }

        private void button1_Click(object sender, EventArgs e)
        {
            //Application.EnableVisualStyles();
            //Application.SetCompatibleTextRenderingDefault(false);
            Run(ref pictureBox1);
        }

        static void Run(ref PictureBox p)
        {
            Mat image = new Mat("../02.jpg", LoadImageType.Color); //Read the file as an 8-bit Bgr image
            long detectionTime;
            List<Rectangle> faces = new List<Rectangle>();
            List<Rectangle> eyes = new List<Rectangle>();

            //The cuda cascade classifier doesn't seem to be able to load "haarcascade_frontalface_default.xml" file in this release
            //disabling CUDA module for now
            bool tryUseCuda = false;
            bool tryUseOpenCL = true;

            Detect(
                image, "../haarcascade_frontalface_default.xml", "../haarcascade_eye.xml",
                faces, eyes,
                tryUseCuda,
                tryUseOpenCL,
                out detectionTime);

            foreach (Rectangle face in faces)
                CvInvoke.Rectangle(image, face, new Bgr(Color.Red).MCvScalar, 2);
            //foreach (Rectangle eye in eyes)
            //    CvInvoke.Rectangle(image, eye, new Bgr(Color.Blue).MCvScalar, 2);

            //display the image
            p.Image = image.Bitmap;
        }

        public static void Detect(Mat image, String faceFileName, String eyeFileName, List<Rectangle> faces,
            List<Rectangle> eyes, bool tryUseCuda, bool tryUseOpenCL, out long detectionTime)
        {
            Stopwatch watch;
#if !(IOS || NETFX_CORE)
            if (tryUseCuda && CudaInvoke.HasCuda)
            {
                using (CudaCascadeClassifier face = new CudaCascadeClassifier(faceFileName))
                using (CudaCascadeClassifier eye = new CudaCascadeClassifier(eyeFileName))
                {
                    face.ScaleFactor = 1.1;
                    face.MinNeighbors = 10;
                    face.MinObjectSize = Size.Empty;
                    eye.ScaleFactor = 1.1;
                    eye.MinNeighbors = 10;
                    eye.MinObjectSize = Size.Empty;
                    watch = Stopwatch.StartNew();
                    using (CudaImage<Bgr, Byte> gpuImage = new CudaImage<Bgr, byte>(image))
                    using (CudaImage<Gray, Byte> gpuGray = gpuImage.Convert<Gray, Byte>())
                    using (GpuMat region = new GpuMat())
                    {
                        face.DetectMultiScale(gpuGray, region);
                        Rectangle[] faceRegion = face.Convert(region);
                        faces.AddRange(faceRegion);
                        foreach (Rectangle f in faceRegion)
                        {
                            using (CudaImage<Gray, Byte> faceImg = gpuGray.GetSubRect(f))
                            {
                                //For some reason a clone is required.
                                //Might be a bug of CudaCascadeClassifier in opencv
                                using (CudaImage<Gray, Byte> clone = faceImg.Clone(null))
                                using (GpuMat eyeRegionMat = new GpuMat())
                                {
                                    eye.DetectMultiScale(clone, eyeRegionMat);
                                    Rectangle[] eyeRegion = eye.Convert(eyeRegionMat);
                                    foreach (Rectangle e in eyeRegion)
                                    {
                                        Rectangle eyeRect = e;
                                        eyeRect.Offset(f.X, f.Y);
                                        eyes.Add(eyeRect);
                                    }
                                }
                            }
                        }
                    }
                    watch.Stop();
                }
            }
            else
#endif
            {
                //Many opencl functions require opencl compatible gpu devices.
                //As of opencv 3.0-alpha, opencv will crash if opencl is enabled and only an opencl compatible cpu device is present.
                //So we need to call CvInvoke.HaveOpenCLCompatibleGpuDevice instead of CvInvoke.HaveOpenCL (which also returns true on a system that only has cpu opencl devices).
                CvInvoke.UseOpenCL = tryUseOpenCL && CvInvoke.HaveOpenCLCompatibleGpuDevice;

                //Read the HaarCascade objects
                using (CascadeClassifier face = new CascadeClassifier(faceFileName))
                using (CascadeClassifier eye = new CascadeClassifier(eyeFileName))
                {
                    watch = Stopwatch.StartNew();
                    using (UMat ugray = new UMat())
                    {
                        CvInvoke.CvtColor(image, ugray, Emgu.CV.CvEnum.ColorConversion.Bgr2Gray);

                        //normalizes brightness and increases contrast of the image
                        CvInvoke.EqualizeHist(ugray, ugray);

                        //Detect the faces from the gray scale image and store the locations as rectangle
                        //The first dimensional is the channel
                        //The second dimension is the index of the rectangle in the specific channel
                        Rectangle[] facesDetected = face.DetectMultiScale(
                            ugray,
                            1.1,
                            10,
                            new Size(20, 20));

                        faces.AddRange(facesDetected);

                        foreach (Rectangle f in facesDetected)
                        {
                            //Get the region of interest on the faces
                            using (UMat faceRegion = new UMat(ugray, f))
                            {
                                Rectangle[] eyesDetected = eye.DetectMultiScale(
                                    faceRegion,
                                    1.1,
                                    10,
                                    new Size(20, 20));

                                foreach (Rectangle e in eyesDetected)
                                {
                                    Rectangle eyeRect = e;
                                    eyeRect.Offset(f.X, f.Y);
                                    eyes.Add(eyeRect);
                                }
                            }
                        }
                    }
                    watch.Stop();
                }
            }
            detectionTime = watch.ElapsedMilliseconds;
        }
    }
}

camera06: finds faces in a snapshot taken from the video

Design view:

Code:

using System;

using System.Collections.Generic;

using System.ComponentModel;

using System.Data;

using System.Drawing;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

using System.Windows.Forms;

//

using System.Diagnostics;

using System.IO;

using Emgu.CV;

using Emgu.CV.CvEnum;

using Emgu.CV.UI;

using Emgu.CV.Structure;

using Emgu.Util;

using Emgu.CV.ML;

using Emgu.CV.Cuda;

using System.Collections.Generic;

using System.ComponentModel;

using System.Data;

using System.Drawing;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

using System.Windows.Forms;

//

using System.Diagnostics;

using System.IO;

using Emgu.CV;

using Emgu.CV.CvEnum;

using Emgu.CV.UI;

using Emgu.CV.Structure;

using Emgu.Util;

using Emgu.CV.ML;

using Emgu.CV.Cuda;

namespace camera06

{

public partial class camera06 : Form

{

public Capture cap;

private bool capInProgress;

Mat mat_face;

public camera06()

{

InitializeComponent();

}

{

public partial class camera06 : Form

{

public Capture cap;

private bool capInProgress;

Mat mat_face;

public camera06()

{

InitializeComponent();

}

private void Form1_Load(object sender, EventArgs e)

{

{

}

private void btn攝影_Click(object sender, EventArgs e)

{

#region if capture is not created, create it now

if (cap == null)

{

try

{

cap = new Capture();

}

catch (NullReferenceException excpt)

{

MessageBox.Show(excpt.Message);

}

}

#endregion

private void btn攝影_Click(object sender, EventArgs e)

{

#region if capture is not created, create it now

if (cap == null)

{

try

{

cap = new Capture();

}

catch (NullReferenceException excpt)

{

MessageBox.Show(excpt.Message);

}

}

#endregion

if (cap != null)

{

if (capInProgress)

{ //stop the capture

Application.Idle -= new EventHandler(ProcessFrame);

btn攝影.Text = "開始攝像";

{

if (capInProgress)

{ //stop the capture

Application.Idle -= new EventHandler(ProcessFrame);

btn攝影.Text = "開始攝像";

//timer1.Enabled = false;

}

else

{

//start the capture

btn攝影.Text = "停止";

Application.Idle += new EventHandler(ProcessFrame);

//timer1.Enabled = true;

}

capInProgress = !capInProgress;

}

}

private void ProcessFrame(object sender, EventArgs arg)

{

pictureBox1.Image = cap.QueryFrame().Bitmap;

}

}

else

{

//start the capture

btn攝影.Text = "停止";

Application.Idle += new EventHandler(ProcessFrame);

//timer1.Enabled = true;

}

capInProgress = !capInProgress;

}

}

private void ProcessFrame(object sender, EventArgs arg)

{

pictureBox1.Image = cap.QueryFrame().Bitmap;

}

private void btn截圖_Click(object sender, EventArgs e)

{

pictureBox2.Image = cap.QueryFrame().Bitmap;

mat_face= cap.QueryFrame();

}

{

pictureBox2.Image = cap.QueryFrame().Bitmap;

mat_face= cap.QueryFrame();

}

private void btn找臉_Click(object sender, EventArgs e)

{

{

Run(ref mat_face);

pictureBox2.Image = mat_face.Bitmap;

}

static void Run(ref Mat mat_f)

{

Mat image = mat_f; //Read the files as an 8-bit Bgr image

long detectionTime;

List<Rectangle> faces = new List<Rectangle>();

List<Rectangle> eyes = new List<Rectangle>();

pictureBox2.Image = mat_face.Bitmap;

}

static void Run(ref Mat mat_f)

{

Mat image = mat_f; //Read the files as an 8-bit Bgr image

long detectionTime;

List<Rectangle> faces = new List<Rectangle>();

List<Rectangle> eyes = new List<Rectangle>();

//The cuda cascade classifier doesn't seem to be able to load "haarcascade_frontalface_default.xml" file in this release

//disabling CUDA module for now

bool tryUseCuda = false;

bool tryUseOpenCL = true;

Detect(

image, "../haarcascade_frontalface_default.xml", "../haarcascade_eye.xml",

faces, eyes,

tryUseCuda,

tryUseOpenCL,

out detectionTime);

foreach (Rectangle face in faces)

CvInvoke.Rectangle(image, face, new Bgr(Color.Red).MCvScalar, 2);

//foreach (Rectangle eye in eyes)

// CvInvoke.Rectangle(image, eye, new Bgr(Color.Blue).MCvScalar, 2);

//display the image

mat_f = image;

}

public static void Detect(Mat image, String faceFileName, String eyeFileName, List<Rectangle> faces,

List<Rectangle> eyes, bool tryUseCuda, bool tryUseOpenCL, out long detectionTime)

{

Stopwatch watch;

#if !(IOS || NETFX_CORE)

if (tryUseCuda && CudaInvoke.HasCuda)

{

using (CudaCascadeClassifier face = new CudaCascadeClassifier(faceFileName))

using (CudaCascadeClassifier eye = new CudaCascadeClassifier(eyeFileName))

{

face.ScaleFactor = 1.1;

face.MinNeighbors = 10;

face.MinObjectSize = Size.Empty;

eye.ScaleFactor = 1.1;

eye.MinNeighbors = 10;

eye.MinObjectSize = Size.Empty;

watch = Stopwatch.StartNew();

using (CudaImage<Bgr, Byte> gpuImage = new CudaImage<Bgr, byte>(image))

using (CudaImage<Gray, Byte> gpuGray = gpuImage.Convert<Gray, Byte>())

using (GpuMat region = new GpuMat())

{

face.DetectMultiScale(gpuGray, region);

Rectangle[] faceRegion = face.Convert(region);

faces.AddRange(faceRegion);

foreach (Rectangle f in faceRegion)

{

using (CudaImage<Gray, Byte> faceImg = gpuGray.GetSubRect(f))

{

//For some reason a clone is required.

//Might be a bug of CudaCascadeClassifier in opencv

using (CudaImage<Gray, Byte> clone = faceImg.Clone(null))

using (GpuMat eyeRegionMat = new GpuMat())

{

eye.DetectMultiScale(clone, eyeRegionMat);

Rectangle[] eyeRegion = eye.Convert(eyeRegionMat);

foreach (Rectangle e in eyeRegion)

{

Rectangle eyeRect = e;

eyeRect.Offset(f.X, f.Y);

eyes.Add(eyeRect);

}

}

}

}

}

watch.Stop();

}

}

else

#endif

{

//Many OpenCL functions require an OpenCL-compatible GPU device.

//As of OpenCV 3.0-alpha, OpenCV will crash if OpenCL is enabled and only an OpenCL-compatible CPU device is present.

//So we need to call CvInvoke.HaveOpenCLCompatibleGpuDevice instead of CvInvoke.HaveOpenCL (which also returns true on a system that only has CPU OpenCL devices).

CvInvoke.UseOpenCL = tryUseOpenCL && CvInvoke.HaveOpenCLCompatibleGpuDevice;

//Read the HaarCascade objects

using (CascadeClassifier face = new CascadeClassifier(faceFileName))

using (CascadeClassifier eye = new CascadeClassifier(eyeFileName))

{

watch = Stopwatch.StartNew();

using (UMat ugray = new UMat())

{

CvInvoke.CvtColor(image, ugray, Emgu.CV.CvEnum.ColorConversion.Bgr2Gray);

//normalizes brightness and increases contrast of the image

CvInvoke.EqualizeHist(ugray, ugray);

//Detect the faces from the grayscale image and store the locations as rectangles

//DetectMultiScale returns one Rectangle per detected face

Rectangle[] facesDetected = face.DetectMultiScale(

ugray,

1.1,

10,

new Size(20, 20));

faces.AddRange(facesDetected);

foreach (Rectangle f in facesDetected)

{

//Get the region of interest on the faces

using (UMat faceRegion = new UMat(ugray, f))

{

Rectangle[] eyesDetected = eye.DetectMultiScale(

faceRegion,

1.1,

10,

new Size(20, 20));

foreach (Rectangle e in eyesDetected)

{

Rectangle eyeRect = e;

eyeRect.Offset(f.X, f.Y);

eyes.Add(eyeRect);

}

}

}

}

watch.Stop();

}

}

detectionTime = watch.ElapsedMilliseconds;

}

}

}

camera07: capture video and detect faces at the same time

Design view:

Code:

using System;

using System.Collections.Generic;

using System.ComponentModel;

using System.Data;

using System.Drawing;

using System.Linq;

using System.Text;

using System.Threading.Tasks;

using System.Windows.Forms;

//

using System.Diagnostics;

using System.IO;

using Emgu.CV;

using Emgu.CV.CvEnum;

using Emgu.CV.UI;

using Emgu.CV.Structure;

using Emgu.Util;

using Emgu.CV.ML;

using Emgu.CV.Cuda;

namespace camera07

{

public partial class Form1 : Form

{

public Capture cap;

private bool capInProgress;

Mat _mat;

public Form1()

{

InitializeComponent();

}

private void Form1_Load(object sender, EventArgs e)

{

}

private void btn攝像_Click(object sender, EventArgs e)

{

#region if capture is not created, create it now

if (cap == null)

{

try

{

cap = new Capture();

}

catch (NullReferenceException excpt)

{

MessageBox.Show(excpt.Message);

}

}

#endregion

if (cap != null)

{

if (capInProgress)

{ //stop the capture

Application.Idle -= new EventHandler(ProcessFrame);

btn攝像.Text = "開始攝像";

}

else

{

//start the capture

btn攝像.Text = "停止";

Application.Idle += new EventHandler(ProcessFrame);

}

capInProgress = !capInProgress;

}

}

private void ProcessFrame(object sender, EventArgs arg)

{

//pictureBox1.Image = cap.QueryFrame().Bitmap;

_mat = cap.QueryFrame();

Run(ref _mat);

pictureBox1.Image = _mat.Bitmap;

}

static void Run(ref Mat mat_f)

{

Mat image = mat_f; //Read the files as an 8-bit Bgr image

long detectionTime;

List<Rectangle> faces = new List<Rectangle>();

List<Rectangle> eyes = new List<Rectangle>();

//The cuda cascade classifier doesn't seem to be able to load "haarcascade_frontalface_default.xml" file in this release

//disabling CUDA module for now

bool tryUseCuda = false;

bool tryUseOpenCL = true;

Detect(

image, "../haarcascade_frontalface_default.xml", "../haarcascade_eye.xml",

faces, eyes,

tryUseCuda,

tryUseOpenCL,

out detectionTime);

//faces

foreach (Rectangle face in faces)

CvInvoke.Rectangle(image, face, new Bgr(Color.Red).MCvScalar, 2);

//eyes

//foreach (Rectangle eye in eyes)

// CvInvoke.Rectangle(image, eye, new Bgr(Color.Blue).MCvScalar, 2);

//display the image

mat_f = image;

}

public static void Detect(Mat image, String faceFileName, String eyeFileName, List<Rectangle> faces,

List<Rectangle> eyes, bool tryUseCuda, bool tryUseOpenCL, out long detectionTime)

{

Stopwatch watch;

#if !(IOS || NETFX_CORE)

if (tryUseCuda && CudaInvoke.HasCuda)

{

using (CudaCascadeClassifier face = new CudaCascadeClassifier(faceFileName))

using (CudaCascadeClassifier eye = new CudaCascadeClassifier(eyeFileName))

{

face.ScaleFactor = 1.1;

face.MinNeighbors = 10;

face.MinObjectSize = Size.Empty;

eye.ScaleFactor = 1.1;

eye.MinNeighbors = 10;

eye.MinObjectSize = Size.Empty;

watch = Stopwatch.StartNew();

using (CudaImage<Bgr, Byte> gpuImage = new CudaImage<Bgr, byte>(image))

using (CudaImage<Gray, Byte> gpuGray = gpuImage.Convert<Gray, Byte>())

using (GpuMat region = new GpuMat())

{

face.DetectMultiScale(gpuGray, region);

Rectangle[] faceRegion = face.Convert(region);

faces.AddRange(faceRegion);

foreach (Rectangle f in faceRegion)

{

using (CudaImage<Gray, Byte> faceImg = gpuGray.GetSubRect(f))

{

//For some reason a clone is required.

//Might be a bug of CudaCascadeClassifier in opencv

using (CudaImage<Gray, Byte> clone = faceImg.Clone(null))

using (GpuMat eyeRegionMat = new GpuMat())

{

eye.DetectMultiScale(clone, eyeRegionMat);

Rectangle[] eyeRegion = eye.Convert(eyeRegionMat);

foreach (Rectangle e in eyeRegion)

{

Rectangle eyeRect = e;

eyeRect.Offset(f.X, f.Y);

eyes.Add(eyeRect);

}

}

}

}

}

watch.Stop();

}

}

else

#endif

{

//Many OpenCL functions require an OpenCL-compatible GPU device.

//As of OpenCV 3.0-alpha, OpenCV will crash if OpenCL is enabled and only an OpenCL-compatible CPU device is present.

//So we need to call CvInvoke.HaveOpenCLCompatibleGpuDevice instead of CvInvoke.HaveOpenCL (which also returns true on a system that only has CPU OpenCL devices).

CvInvoke.UseOpenCL = tryUseOpenCL && CvInvoke.HaveOpenCLCompatibleGpuDevice;

//Read the HaarCascade objects

using (CascadeClassifier face = new CascadeClassifier(faceFileName))

using (CascadeClassifier eye = new CascadeClassifier(eyeFileName))

{

watch = Stopwatch.StartNew();

using (UMat ugray = new UMat())

{

CvInvoke.CvtColor(image, ugray, Emgu.CV.CvEnum.ColorConversion.Bgr2Gray);

//normalizes brightness and increases contrast of the image

CvInvoke.EqualizeHist(ugray, ugray);

//Detect the faces from the grayscale image and store the locations as rectangles

//DetectMultiScale returns one Rectangle per detected face

Rectangle[] facesDetected = face.DetectMultiScale(

ugray,

1.1,

10,

new Size(20, 20));

faces.AddRange(facesDetected);

foreach (Rectangle f in facesDetected)

{

//Get the region of interest on the faces

using (UMat faceRegion = new UMat(ugray, f))

{

Rectangle[] eyesDetected = eye.DetectMultiScale(

faceRegion,

1.1,

10,

new Size(20, 20));

foreach (Rectangle e in eyesDetected)

{

Rectangle eyeRect = e;

eyeRect.Offset(f.X, f.Y);

eyes.Add(eyeRect);

}

}

}

}

watch.Stop();

}

}

detectionTime = watch.ElapsedMilliseconds;

}

}

}

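I wrote these programs over winter break.

I put this post together in a hurry, so many steps are still missing.

I'll fill in the rest later.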
